This blog post is part of a series of reflections on the Coronavirus / Covid-19 crisis and the transition I had to make from face-to-face courses to online classes.

Never before have students cheated as much as at the end of this semester. The lockdown meant that final exams had to be held remotely, which obviously opened the door to widespread cheating. Although many universities require students to sign a declaration of honour, this did not prevent an unusual amount of cheating. This raises the question of why students cheat in the first place. The traditional reasons – i.e. outside of lockdown – are either unpreparedness or a taste for risky games, with cheating seen as a moral shortcut. But in these times of pandemic, we have seen that normally « non-cheating » students opted for cheating this time, in order to improve their grades. Indeed, in many countries, potential employers ask for details of the grades obtained before recruiting. Some students fear that their « lockdown / online classes » grades will be too low for their future employment, or that they will not be able to enter certain specialisation courses. This is exacerbated by the fact that in some courses, professors seem to be unaware of the constraints of distance learning, and require their students to cram a lot of knowledge into their heads in a very limited time (i.e. during the semester). In this case, we are no longer talking about learning; the right word is force-feeding.

This blog post does not seek to understand – let alone excuse – cheating behaviour online, but rather to reflect on ways to avoid cheating in online exams.

Know your audience(s) / market segments 😉

The final exam brings together different populations whose interests are not necessarily aligned.

- First, there are the cheaters, who are not necessarily a homogeneous population, as indicated in the motivations given above.

- Then there are the non-cheaters, who should not be penalised by anti-fraud strategies. Indeed, multiplying controls (camera surveillance, firewalls to prevent access to certain sites) inevitably creates technological roadblocks and dead ends. As a result, a bona fide student may be penalised by a surveillance system that prevents him or her from taking the exam under good conditions.

- One must also think about the proctors (assistants in charge of monitoring the students during the exam): they are officially in charge of checking that participants do not cheat, but their capacity to act is limited. Indeed, they can see that student X was helped by someone who appeared in the camera's field of view; on the other hand, it is impossible to say what student Y was looking at on her screen: was it the exam, or a WhatsApp discussion of a cheating group?

- Finally, there is the designer of the online exam, who has his own constraints, and who has to make optimization choices (which we will detail in the following paragraphs).

My 4 golden rules of a good online exam

Here is my magic recipe, or moral compass, for the professor in charge of designing an online exam. These laws follow a path analogous to the 3 laws of pedagogy. These 4 laws for exams (online or in-class) would be, in decreasing order of importance:

- Non-cheating students must not suffer from the anti-cheating system;

- Cheating students must be prevented, or severely restricted, in their ability to cheat, without contravening Law #1;

- The exam must be easy for the teacher to grade, without contravening Law #1 or #2;

- Finally, without contravening Laws #1, #2 and #3, the role of proctors should become incidental. Ideally, there would be no need to proctor / watch the students taking the exam. It is a bit like the notion of strong efficiency in financial markets: in a properly designed examination, it should not matter whether a student can communicate with other people to cheat, because in doing so, they would not gain any more advantage than by doing the work alone.

The main tension: time vs. personalisation

For the person designing an exam, one important variable is time. This is not only the time to design and test the exam beforehand, but also the time to grade the exam afterwards.

- At one extreme of this tension is the MCQ (multiple-choice test): for a preparation time that is not too long, the final grading time is very short. This is ideal for the lecturer who does not want to spend too much time on designing and grading the exam.

- At the other end of the spectrum, we find the exam with open-ended questions that require an essay from the student. The term essay is broad: it is not just « do you think that Man is good? », it can also be « given the situation presented above, say what you would recommend ». In this case, it takes time to design the exam, but probably less than in the case of an MCQ – one only has to find generic, incisive questions. In return, the grading / marking requires carefully reading everything the student has written, even reading between the lines. Indeed, even if the student has not used an important keyword, they may nevertheless have understood the notion, and only a fine analysis of their answer makes it possible to judge whether the notion is mastered or just learnt by heart without real understanding. In this second case, the grading / feedback is therefore extremely personalised, as the student really allows us to « get inside his/her head » and see how they think.

This issue of time vs. personalisation will therefore become crucial for many professors when it comes to designing an exam.

We also have conflicts of interest concerning the time that the professor is ready to spend on exams. Let’s take the example of students who failed the exam: in most institutions, this leads to a resit exam. This resit exam represents extra work for the teacher, and additional time for which the professor is usually not paid. So the professor will have to design a second exam for a handful of students, work they would not have had to do if all the students had passed the exam. Thus, in some cases, the teacher will tend to round up the final exam grades to avoid a resit exam; or they could produce a resit exam that is easy to design, quick to grade, and easy to pass. This question of extra working time is exacerbated because the resit exam might only concern a handful of students: who would want to write a 3-hour resit exam for just one person?

Thoughts and solutions for an online exam

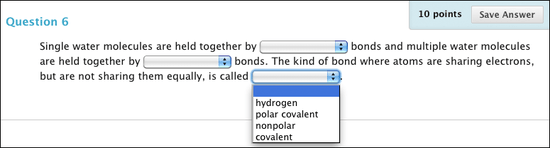

After these general thoughts, let’s consider the different technical solutions for online exams. For the sake of illustration, we will take the Blackboard integrated examination system, not only because this solution is widespread in the academic world, but also because it offers many possibilities in the construction of online exams.

The wrong solution: MCQs

By MCQs we mean not only multiple-choice questions (MCQs) – with only one possible answer – but also, more generally, multiple-answer questions (MAQs) – where several correct answers have to be selected.

Although they appear to be an easy solution, MCQs/MAQs are not a good way to prevent online cheating, for the reasons detailed below.

- Randomizing answers is not a solution

Blackboard allows you to shuffle the order of answers within a question, in the hope of making it harder for students to pass answers to each other. But alas, many students type very quickly: when they communicate with each other to exchange correct answers, it does not take much more time to say « the answer is €12 » or « answer: because of the cost of capital » than to say « answer b. ».

- Randomizing questions is not a solution

Blackboard also allows you to mix up the order of the questions, to hinder communication between students. But unfortunately, practice shows us that some students are very organised: instead of asking « what is the answer to question 7? », they ask « what is the answer on oil price? » – so it doesn’t matter that the question is in a different place in the exam. Of course, one can build a very large pool of questions to swamp the students: for example, 1,000 exam questions, from which the system will randomly draw 100 questions for a given student. But this poses several problems: first, having to write a large number of questions; second, making sure that the questions are of the same level of difficulty and give the same number of points – this is very tedious. The only remaining possibility – which exists – would be not only to put the questions in random order, but also to forbid going back once a question has been answered. But this may contravene Law 1: a non-cheating student may wish to go back because question 7 (for example) made him/her think of a better solution for question 3. It would not be fair to prevent them from changing a previous answer.
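To make the pool idea concrete, here is a minimal Python sketch of drawing one exam per student from a large pool. It is not Blackboard's own mechanism: the pool, the difficulty tags (easy / medium / hard), the 40/40/20 split and the seed strings are all assumptions made up for illustration. Drawing a fixed number of questions from each difficulty tier is just one possible way to keep the exams comparable, and it still presupposes the tedious work of tagging every question.

```python
import random
from collections import defaultdict


def draw_exam(pool, per_tier, seed):
    """Draw the same number of questions per difficulty tier for one student."""
    rng = random.Random(seed)  # a string seed gives a reproducible draw
    by_tier = defaultdict(list)
    for question_id, tier in pool:
        by_tier[tier].append(question_id)
    exam = []
    for tier in sorted(per_tier):
        exam.extend(rng.sample(by_tier[tier], per_tier[tier]))
    rng.shuffle(exam)  # also randomise the order of the questions
    return exam


# Hypothetical pool: 1,000 question ids, each tagged with a difficulty tier.
TIERS = ("easy", "medium", "hard")
pool = [(f"Q{i:04d}", TIERS[i % 3]) for i in range(1000)]
per_tier = {"easy": 40, "medium": 40, "hard": 20}  # assumed mix, 100 in total

exam_a = draw_exam(pool, per_tier, seed="final-2020:student-A")
exam_b = draw_exam(pool, per_tier, seed="final-2020:student-B")
```

Each student gets 100 distinct questions with the same difficulty mix, and the same seed always reproduces the same exam, which helps if a grade is later contested.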

- Most MCQs test knowledge, not ability.

The author of this article spent the lockdown with 5 young adults who were studying online. Over the weeks, he was thus able to observe the assessment systems of different university courses. Most of the time, online exams take the form of MCQs, and most of the time, these MCQs test knowledge, not ability. Professors warn their students: « for the exam, you should know the important dates and the great names of the inventors ». The MCQ thus becomes a test of memorisation and speed: for some teachers, setting 100 questions for 1 hour of examination is enough – according to them – to discriminate between students. But does this assess their learning, or simply their ability to regurgitate information quickly? Of course, there are courses that require learning by heart (e.g. Anatomy), but these situations are rare: most of the time, courses require students to demonstrate an ability to reason, express themselves and act, and not simply repeat concepts like a performing dog.

- There are problems with the scoring of MCQs.

Some courses have a rule that if students have forgotten only one correct answer, they get zero for the whole question. I consider this an aberration. If I tick 3 answers out of the 4 that were right, I should get ¾ of the points, not zero. Otherwise, there will be no discrimination between the student who has worked ¾ of the way through the subject, and the lazy person who has not studied anything – which is deeply unfair. Fortunately, most examination systems offer to give partial marks according to the number of correct answers the student gets. However, the teacher must (1) be aware of this function, and (2) be willing to apply it.

There is also the question of negative points: should a wrong answer reduce the mark for the question? Let’s imagine a question with multiple answers (MAQ): there are 5 possible answers, and the teacher indicates that there « might be » more than one possible answer (without indicating the number of correct answers). If the teacher makes the mistake of not putting negative marks, then the student only has to tick all 5 answers each time: he will be sure to get 100% of the points. So negative marks should be given for wrong answers. But the next question is more complex: how should these negative points be measured? If you tick all 5 answers, should you get a score of 0, an average score (e.g. 2.5/5) or a negative score (which will then penalise the overall score)? Opinions differ, and as is often the case, the absence of a clear-cut answer should help us ponder and reflect on this issue of negative points – and then adapt our exam accordingly.
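The scoring choices discussed above can be sketched in a few lines of Python. This is only one possible rule, not the one built into any examination system: each correct tick earns an equal share of the points, each wrong tick costs a penalty, and the question score cannot fall below a floor. The function name, its parameters and the default penalty (chosen so that ticking every box yields zero) are my own assumptions.

```python
def score_maq(ticked, correct, options, wrong_penalty=None, floor=0.0):
    """Score one multiple-answer question on a 0..1 scale.

    ticked / correct / options are collections of answer labels.
    By default the penalty per wrong tick is 1 / (number of wrong options),
    so that ticking everything gives exactly 0 (an assumed design choice).
    """
    ticked, correct, options = set(ticked), set(correct), set(options)
    wrong = options - correct
    if wrong_penalty is None:
        wrong_penalty = 1 / len(wrong) if wrong else 0.0
    gained = len(ticked & correct) / len(correct)
    lost = len(ticked & wrong) * wrong_penalty
    return max(floor, gained - lost)


options, correct = "ABCDE", "ABCD"                      # 4 right answers out of 5
partial = score_maq("ABC", correct, options)            # 3 of the 4 right answers
tick_all = score_maq("ABCDE", correct, options)         # ticks everything
loophole = score_maq("ABCDE", correct, options, wrong_penalty=0.0)
```

With these numbers, the student who found 3 of the 4 right answers gets ¾ of the points, ticking every box gets 0, and switching the penalty off reproduces the loophole where ticking every box earns full marks. Passing a negative `floor` would let the penalty spill over into the overall score, which is precisely the point on which opinions differ.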

- The answers to an MCQ must be unequivocal.

The MCQ has the advantage of an automated system: read the question, tick one or more answers, be marked automatically accordingly. But it requires questions and answers to be unequivocal. There is no room for nuance, or for finesse in interpretation. It is therefore a rather demanding literary (and logical) exercise to write a ‘good’ MCQ. In practice, I find that even for the simplest and clearest questions, some students manage to read them in ways far removed from the most obvious context. Are they projecting more difficulties than necessary? Are they looking for a hidden trap? Often, behind its apparent operational simplicity, the MCQ shows that it is really not so simple to test knowledge in a granular way.

Some tips gained from experience

It all starts with the MCQ

The MCQ is not the devil when you look at it in detail. You just have to look beyond the « knowledge test » stage, and try to reach the « test of a skill » stage. Here are some tips from a lot of trial and error over the past years.

- Tip 1: forget MCQs and do only MAQs.

Reminder: MCQs offer only one possible answer, whereas MAQs can offer several correct answers. My advice is to turn all MCQs into MAQs – even those with only one correct answer – for several reasons. First, it is not necessary to announce the number of correct answers. If the student is told that there is only one correct answer, he or she can proceed by elimination, or stop thinking as soon as the correct answer is identified, whereas a sentence such as « check all the correct answers » forces the student to really think about each possibility. Second, at least in Blackboard, MCQs offer « radio button » answers while MAQs offer « check box » answers, which is to the disadvantage of MCQs. Indeed, let’s imagine the following situation: in an MCQ with radio buttons (only one correct answer), the student chooses answer A. But after thinking about it, the student is not sure, and wants to play it safe by not answering this question after all. Well, with radio buttons, it is not possible to un-click an answer: once you have clicked a button (answer A), you can only click another answer (answer B, answer C), but you cannot un-click the whole thing. This matters when a wrong answer costs negative points, because students will then prefer not to answer questions they are unsure about. That is only possible with an MAQ (all boxes can be unchecked) and not with an MCQ that has already been answered (once an answer has been selected, it is not possible to « un-answer » the question).

- Tip 2: answers should ideally check for a skill, not only for knowledge.

An MCQ / MAQ quiz is not bad per se. The professor just needs to write the question as a problem to be solved. For example: « You have to make 100 g of white chocolate and 100 g of milk chocolate. You have 200 g of cocoa butter, 100 g of sugar, 100 ml of milk, 200 g of cocoa powder. How much cocoa powder do you have left at the end of the recipe? » In this case, the student’s professional ability is being tested. It is a more subtle assessment than asking them to tick the right recipe among 4 answers. But it does not solve all the cheating problems: once the student has completed the solving process, he/she can communicate the answer quickly (« hey people, the right answer is 75 g of cocoa!! »)

Calculated formulas

This is a variant of the previous question, but with specific calculations for each student: this type of question generates random numbers. For example, one student will get « you must make 100 g of white chocolate and 100 g of milk chocolate » while another student will have « you must make 70 g of white chocolate and 120 g of milk chocolate », and Blackboard calculates the correct answer each time. The good point is that it really prevents students from communicating their answers. On the other hand, it only tests the ability to memorise – and then apply – a formula, without judging the ability to interpret the result. Furthermore, it only works with subjects that use formulas or calculations.
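The principle of a calculated formula can be sketched in Python as follows. This is an illustration, not Blackboard's implementation: the function names, the range of quantities and the seed format are assumptions, and the ratio of 1.25 g of cocoa powder per gram of milk chocolate is an invented value, chosen only so that the 100 g case gives the 75 g of the earlier example. White chocolate is assumed to use no cocoa powder.

```python
import random

COCOA_STOCK_G = 200       # cocoa powder in stock, as in the example question
COCOA_PER_G_MILK = 1.25   # ASSUMED ratio, picked so that 100 g leaves 75 g


def cocoa_left(milk_g):
    """Cocoa powder left after making milk_g grams of milk chocolate."""
    return COCOA_STOCK_G - COCOA_PER_G_MILK * milk_g


def question_for(student_id, exam_id="final-2020"):
    """Build one student's version of the question and its expected answer."""
    rng = random.Random(f"{exam_id}:{student_id}")  # reproducible per student
    white_g = rng.randrange(50, 151, 10)  # 50, 60, ..., 150 g
    milk_g = rng.randrange(50, 151, 10)
    text = (
        f"You must make {white_g} g of white chocolate and {milk_g} g of "
        "milk chocolate. How much cocoa powder do you have left?"
    )
    return text, cocoa_left(milk_g)


text, expected = question_for("student-A")
```

Because every student receives different quantities, passing on « 75 g » is useless; what can still circulate is the formula itself, which is exactly the limitation noted above.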

Jumbled sentences

These are my favourite questions. Normally, they serve to check concepts that were learned by heart (e.g. the cubitus is [a bone / a nerve / a gland]), but they can be turned into real skills testing tools.

- Tip 1. Avoid fill-in-the-blank fields, use drop-down menus instead.

Some ‘fill-in-the-blank’ sentences offer a text field to be filled in, which means the student has to type the correct answer on the keyboard. This is not advisable, as the software only recognises the words that are declared. For example, if the question is « The early bird catches the… », the professor will have declared « worm » as the correct answer, but perhaps not « Worm » with a capital letter. A typographical error is all that is needed for the answer to be considered incorrect (« wurm »), which is not fair, especially when English is not the student’s native language. So the best solution is to use predefined drop-down menus. The advantage of those is that they offer a list of terms to choose from: no problem with typos, misspelling, or even synonyms (« insect » or « food » instead of « worm »).
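The fragility of typed answers is easy to reproduce. The sketch below is not the matching code of any real examination software; both functions are hypothetical and simply contrast a strict comparison with a slightly more forgiving one that ignores case and stray spaces.

```python
def exact_match(answer, accepted):
    """Strict comparison: only the declared spellings are accepted."""
    return answer in accepted


def normalized_match(answer, accepted):
    """Ignore case and extra whitespace before comparing (an assumed policy)."""
    def norm(s):
        return " ".join(s.split()).casefold()
    return norm(answer) in {norm(a) for a in accepted}


accepted = {"worm"}                                # what the professor declared
strict_capital = exact_match("Worm", accepted)     # rejected: capital letter
lenient_capital = normalized_match(" Worm ", accepted)
lenient_typo = normalized_match("wurm", accepted)  # still rejected: typo
```

Normalising the text rescues « Worm » but not « wurm » or a synonym, which is why a drop-down menu remains the more robust choice.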

- Tip 2: The answer should ideally test a skill, instead of pure knowledge.

This is the same reasoning as for the MCQs / MAQs: the professor needs to write the question in the form of a problem to be solved. The bonus here is that the sentence makes it possible to validate a set of notions. If I take the example of financial analysis (one of my courses), the problem will take the following form: « Here are the accounts of a company over the last 5 years. Calculate the return on capital employed (ROCE), break it down, and then find the correct elements in the following sentence. »

The sentence then offers gaps with a drop-down list, as in this example: « The operating margin (increases; decreases; remains stable; decreases slightly), and at the same time there is an (increase; decrease; stagnation) of the NWC in days of sales which, in the end, is rather (good; bad; neutral) as far as profitability is concerned ».

This type of sentence therefore requires the student to make calculations AND make a diagnosis with causes, consequences and a value judgement on the situation. An additional advantage is that there can be several sentences: one could even imagine a whole paragraph of diagnosis to be formulated. However, there are some limitations to this tool. First, the wording of the sentences with blanks and, above all, the choice of terms in the drop-down lists, must give only one possible solution. Since there must be no room for different interpretations (i.e. different sentences that could all be « right » somehow), this usually leads to fairly simple cases. Nothing prevents the professor from writing more complex sentences, but then he/she has to make sure that there is only one winning combination of words. Second, this does not totally prevent cheating: once a student has done the calculations, they can pass on the correct sentence to classmates (« The operating margin is increasing, and at the same time the NWC in days of sales is decreasing, which, in the end, is rather good as far as profitability is concerned »)
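The « one winning combination » constraint can be checked mechanically. The Python sketch below uses the three drop-down lists of the example sentence and, as the key, the combination quoted just above; the per-blank partial credit is my own assumption, not a description of how any examination system marks such questions.

```python
from itertools import product

# Drop-down choices for the three blanks of the example sentence.
BLANKS = [
    ("increases", "decreases", "remains stable", "decreases slightly"),
    ("increase", "decrease", "stagnation"),
    ("good", "bad", "neutral"),
]
# Key taken from the example of a correct sentence.
KEY = ("increases", "decrease", "good")


def grade_sentence(choices, key=KEY):
    """Fraction of blanks filled correctly (assumed partial-credit rule)."""
    return sum(c == k for c, k in zip(choices, key)) / len(key)


all_sentences = list(product(*BLANKS))  # every sentence a student can build
winning = [s for s in all_sentences if grade_sentence(s) == 1.0]
two_of_three = grade_sentence(("increases", "increase", "good"))
```

With 4 × 3 × 3 = 36 possible sentences and a single winning one, pure guessing rarely pays off. The code only confirms that the key is unique in the marking scheme; whether another sentence would also be defensible given the accounts remains a judgement the professor has to make.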

The 5th Law

All of the solutions mentioned here are interesting, because they not only offer many more possibilities than the « simple » MCQ, but above all, they prompt reflection on what the teacher wants to assess.

Now there is one more important aspect: feedback. Many students want more than a grade: they want to understand what they did well, and what they could have done better. A score on an MCQ is therefore not enough. We come back to the tension between the automation of marking (which suits the teacher), and the personalisation of assessment (which is the legitimate demand of the student). We can therefore add Law n°5: an ideal exam should allow students to learn from their mistakes.

Conclusion

Coming back to the objective – i.e. to prevent cheating while respecting Law 1 – no solution is 100% reliable. Once students can communicate with each other, every evaluation system – however subtle and complex – shows its flaws. And no computer system can cut off all the means of communication among remote students. In other words, although the solutions mentioned have their advantages, none of them can meet the requirements of Law 4, i.e. to have an unsupervised examination. An unsupervised examination would require that the communication of information among students becomes useless. This means that there should be a different examination for each student. This poses obvious time problems: not only the time to design multiple exams, but also the time to grade them. As a matter of fact, there are no economies of scale if the professor is confronted with, say, 25 different exams for 25 students.

Actually, this kind of differentiated assessment (each student having their own specific questions) already exists. This is what we call individual assignments, the ones to be handed in during the semester. One solution could be to forgo final exams: evaluation of the student would be done by continuous assessment, in the form of an individualised control of abilities and skills. If we go in this direction, it would be necessary for the professors – and their institutions – to accept the additional time that this represents in the professor’s work. In other words: if we do not want to give the same task to all the students, we must acknowledge that individualised evaluation means more time allocated to evaluation.

All those reflections finally boil down to one general piece of advice: if the university / business school really wants to stick to final examinations, they should realise that no online exam is 100% reliable at preventing cheating. The best solution is to organise in-classroom examinations, with someone on site to ensure that no cheating takes place.